Thursday, 22 November 2012

Loughborough's Department of Information Science to Close

In a statement, Loughborough says that the centre will "allow the university to better integrate the strengths it has in research, teaching and enterprise in the department of information science and the School of Business and Economics, and will build on the expertise and reputation of both".

Source: Times Higher Education

IM 1, LIS 0?

Saturday, 10 November 2012

CFP: Classification and Visualization, The Hague, 24-25 October 2013

INTERNATIONAL UDC SEMINAR 2013

Classification & Visualization:

Interfaces to Knowledge

VENUE: National Library of the Netherlands

DATE: 24-25 October 2013

WEBSITE: http://seminar.udcc.org/2013/

CONTACT: seminar2013@udcc.org

PROPOSALS DEADLINE: 15 January 2013

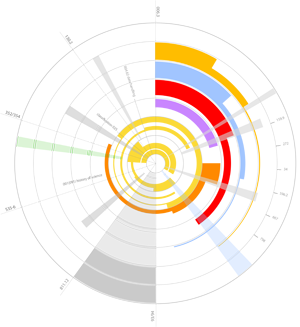

"Classification & Visualization: Interfaces to Knowledge" is the fourth in a series of International UDC Seminars devoted to advances in documentary classification research and their application in a networked environment.

The objective of this conference is to explore cutting-edge advances and techniques in the visualization of knowledge across various fields of application, and their potential impact on thinking about developments in the more mainstream bibliographic and documentary classifications.

We invite overviews, illustrations and analysis of approaches to and models of the visualization of knowledge that can help advance the application of documentary and bibliographic classification in information and knowledge discovery. We welcome high quality, innovative research contributions from various fields of application including:

- visualization of knowledge orders (e.g. scientific taxonomies, Wikipedia)

- visualization of collection content, large datasets

- visualization of knowledge classifications for the purpose of managing the classifications and working with them

- visualization of knowledge to support interactive searching, users' browsing behaviour (IR), and classification as an aid to information navigation

We will accept two kinds of contributions: conference papers and posters. Authors should submit a paper proposal in the form of an extended abstract (1000-1200 words including references for papers and 500-600 words for posters). The submission form is provided on the conference website.

Conference proceedings will be published by Ergon Verlag and will be distributed at the conference.

IMPORTANT DATES:

15 Jan 2013 Paper proposal submission deadline

15 Feb 2013 Notification of acceptance

15 Apr 2013 Paper submission

ORGANIZER: International UDC Seminar 2013 is organized by the UDC Consortium (UDCC) and hosted by the Koninklijke Bibliotheek (the National Library of the Netherlands). UDCC is a not-for-profit organization, based in The Hague, established to maintain and distribute the Universal Decimal Classification and to support its use and development.

Wednesday, 17 October 2012

UKeiG announces Tony Kent Strix Award 2012 Winners

The UKeiG Tony Kent Strix award 2012 goes jointly to Doug Cutting and Professor David Hawking.


Wednesday, 5 September 2012

The Shape of Knowledge - review of event

The Grammar of Graphics

The first speaker, Conrad Taylor, introduced us to the Grammar of Graphics. He opened with a Venn diagram showing the intersection of Information Designers, IT people, and Information and Knowledge Managers. He pointed out that these groups don’t spend enough time talking to each other and that the whole information community would benefit from fewer silos and more interdisciplinary engagement.

Visual reasoning is aeons old and common to most of the animal kingdom. Humans navigate the world and gain huge amounts of information in the “blink of an eye”. Communication goes beyond the use of language and includes visual cues such as gesturing; drawing is often an extended form of gesturing, with the movement perhaps conveying the meaning more than the resulting sketches do. However, there has been little research into the grammar and semantics of visual information and imagery.

Often the ownership of the technology affects the nature of the communication; Mind Maps, for example, are a commercial product. However, in pre-literate communities, creating graphics by drawing in the sand and using stones to represent ideas can be very effective and egalitarian, as no-one owns the technology of expression.

Conrad offered an overview of the history of information visualization, mentioning the Tree of Porphyry, medical diagrams by Ibn al-Nafis, Agricola’s De Re Metallica, the Ebstorf Mappa Mundi, and Mohammad Al-Idrissi’s map of Europe. In the 18th and 19th centuries people started to produce data graphics. Examples include Joseph Priestley’s biographical timelines, William Playfair’s line, bar, and pie charts, Charles Joseph Minard’s graphic (1869) showing the advance and retreat of Napoleon’s army, John Snow’s map of cholera cases in London, Otto and Marie Neurath’s diagrams, and Florence Nightingale’s coxcomb charts, an early example of “socioinformatics”.

There are pitfalls with graphics, however. Choropleth maps can mislead, and designers often think they are scaling up linearly: to make a shape twice as big they double its width and height, but observers see the resulting shape, whose area has quadrupled, as representing four times as much. Not many people have tried to unify the field of information visualization: cartographers tend to analyse cartography, chart people talk about charts, and computer scientists talk about on-screen design. However, Conrad mentioned a number of writers in this area, including Jacques Bertin, Edward Tufte, Jan V White, Gene Zelazny, Doug Simonds, Clive Richards, Michael Twyman, B Tversky, Robert Horn (Visual Language), Alan MacEachren (How Maps Work), Colin Ware (Information Visualization), and Yuri Engelhardt (The Language of Graphics).
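The arithmetic behind this scaling pitfall can be illustrated with a minimal Python sketch. It assumes that viewers judge a 2-D symbol by its area; the function names are illustrative only. Area grows with the square of the linear scale factor, so a symbol meant to show twice the value should have each dimension scaled by the square root of 2, not by 2.

```python
import math

def area_ratio(linear_factor: float) -> float:
    """How much a 2-D shape's area grows when every linear dimension
    (width, height, radius) is multiplied by linear_factor."""
    return linear_factor ** 2

def linear_factor_for(value_ratio: float) -> float:
    """Linear scale factor that makes a shape's *area* grow in
    proportion to the data value it represents (assumes area is
    what the viewer perceives)."""
    return math.sqrt(value_ratio)

# Naive approach: double width and height to show "twice as much".
print(area_ratio(2.0))                     # 4.0, reads as four times as much
# Area-proportional approach: scale each dimension by sqrt(2) instead.
print(linear_factor_for(2.0))              # about 1.414
print(area_ratio(linear_factor_for(2.0)))  # about 2.0
```

The same reasoning applies to circles on a map: scaling the radius in proportion to the value exaggerates large values, whereas scaling the radius by the square root of the value keeps the area proportional.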

He discussed symbology, compositionality, and Jacques Bertin’s “retinal variables”, and presented various examples to illustrate how visual elements are put together as a form of grammar, and how visual vocabularies such as line weights in network diagrams add extra meaning to graphics.

Mapping Software

Martin Dodge of the University of Manchester, whose work includes the Atlas of Cyberspace and the Codespace project, discussed the problems of trying to represent the Internet as a map. Online spaces are not like Euclidean space, and much of the web is “dark”: inaccessible for reasons of security or copyright protection, or simply not easy to index. It is also so huge that you can’t download it into something else in order to run algorithms on it or to process it into a smaller or compressed model. One issue is that people download millions of web pages and throw algorithms at the data without really knowing what they want to see as a result. It is easy to create visualizations based on metaphors that actually confuse more than they clarify. One way to represent the web might be with 3D “fly-through” maps, but these are very hard to display on a flat screen.

Maps are an important part of our cultural psyche, determining how we see the world, but it is very easy to create maps and graphs that look wonderful yet fail to convey any information. There are software packages that produce lovely visualizations of data, but without thought and user testing they may be meaningless or misleading.

Martin offered examples of cadastral maps (treemaps, hierarchical tiling, space-filling maps, and landscape maps) built using clustering and self-organizing algorithms. He pointed out that although these visualizations are possible, the Web looks much as it did in 1993, with pages still the basic way information is accessed. We have not yet found a “tube map” for the Web, and he questioned whether “cyberspace” is a bit of a red herring, suggesting that it may make no sense to think of the Web as “space” at all.
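Treemaps are one of the space-filling layouts mentioned above. As a minimal sketch, here is the simplest treemap layout, slice-and-dice, in Python: each node's rectangle is divided among its children in proportion to their sizes, alternating vertical and horizontal cuts at each level. The `Node` class and the sample site hierarchy are hypothetical, and the maps shown at the event may well have used other layout or clustering algorithms.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    name: str
    size: float = 0.0  # only meaningful for leaves
    children: list["Node"] = field(default_factory=list)

    def total(self) -> float:
        """Leaf size, or the sum of all descendant leaf sizes."""
        if not self.children:
            return self.size
        return sum(child.total() for child in self.children)

def slice_and_dice(node, x, y, w, h, depth=0, out=None):
    """Lay out a hierarchy as nested rectangles (slice-and-dice treemap).

    Returns a list of (name, x, y, width, height) for every node."""
    if out is None:
        out = []
    out.append((node.name, x, y, w, h))
    total = node.total()
    offset = 0.0  # fraction of the parent rectangle already used
    for child in node.children:
        share = child.total() / total if total else 0.0
        if depth % 2 == 0:  # even depth: slice left to right
            slice_and_dice(child, x + offset * w, y, share * w, h, depth + 1, out)
        else:               # odd depth: dice top to bottom
            slice_and_dice(child, x, y + offset * h, w, share * h, depth + 1, out)
        offset += share
    return out

# Hypothetical website hierarchy, sized by number of pages.
site = Node("site", children=[
    Node("news", 3),
    Node("blog", children=[Node("2011", 1), Node("2012", 4)]),
])
for name, x, y, w, h in slice_and_dice(site, 0, 0, 100, 100):
    print(f"{name:5} x={x:5.1f} y={y:5.1f} w={w:5.1f} h={h:5.1f}")
```

Slice-and-dice preserves the order of siblings but can produce long, thin rectangles; squarified layouts trade that ordering for more readable aspect ratios.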

He suggested that it might be more interesting to make a map of the Web and the world that would make sense to machines: when we have ubiquitous computing, with devices controlling the real world everywhere, we will need maps that those machines can use. James Bridle and Bruce Sterling have written in this area, and there is a new field of research, software studies, with people like Matthew Fuller and Lev Manovich investigating how software is affecting culture and society. There is a “code space” that needs to work; otherwise, real-world objects controlled by software will literally crash. He suggested that rather than mapping the Web, it might be interesting to map a specific device, such as someone’s smartphone, which carries data about their movements, contacts, conversations, etc., and that we may end up with software that maps itself as it goes along. This could have huge ethical implications. Other interesting maps might show how a phone moves through “Hertzian space”, the world of electromagnetic waves and signals.

Touch technologies

Marianne Lykke of the University of Aalborg described an experiment investigating the use of touch technologies in taxonomy building. Interestingly, her findings reinforced themes mentioned by Conrad. For example, touch technologies encourage egalitarian collaboration, like the drawings in sand, as quieter or shyer members of a group find it easier to join in by moving objects on a screen than by speaking out in a group discussion or workshop.

Marianne explained how she had used touch technologies to replace card sorting techniques in the creative and analysis phase of designing a new knowledge organization system. The technologies were useful for finding user-friendly concepts, understanding user preferences, and selecting preferred terms.

She used the Visual Understanding Environment (VUE) and Notebook, which were lightweight, easy to use, and easy to play and interact with in groups. They helped participants reach a shared understanding more quickly than paper-based techniques did, allowed previous versions to be saved and stored more easily than with paper, and enabled embodied conversation as the group worked together.

Limitations were that only a few terms could be managed at a time and the technologies at the moment are only suitable for a small number of users, as the users have to be able to crowd round the screen.

Data journalism

Lisa Evans explained how data journalism works at The Guardian. She provided lots of examples of data-driven stories. One of the most popular is a visualization of government spending. As The Guardian has produced this every year, the team can now run comparisons and show trends over time.

They also build interactive tools, such as one that allowed you to “play chancellor” and allocate spending to different government departments. Data analysis can be used to check claims made by companies as well as governments, which is of public interest. For example, they discovered that despite claims by the drinks company Innocent that it would donate some of the purchase price of bottles sold to charity, it had not in fact made any donations.

A map of where people involved in the 2011 riots were from showed that they were overwhelmingly from areas of social deprivation, despite government insistence that poverty was not a causal factor in the rioting.

The data team use a lot of tools, such as Many Eyes and Google Maps, but are also looking for Open Source tools. Better, faster tools and techniques are needed, as data analysis takes time but news demands rapid responses to events. Data journalism is in its infancy but is already proving very popular, with the data blog being the second most popular page on The Guardian’s website.

Follow the data team on Twitter: @datastore and @objectgroup.

Visual presentation of environment and human health data and information

Will Stahl-Timmins of the University of Exeter demonstrated his visualizations of health and climate change information and how these have been used to help people understand complex data. However, categorizations of data can be misleading, and with a graphic it is very easy to give a false impression.

He showed a chart of UK carbon emissions by industry sector in which the “transport” category did not include international flights, which could lead people to jump to the wrong conclusions about the environmental impact of air travel.

Sometimes there has to be some “designers’ licence” to make graphics clear. He demonstrated a graphic that combined data about how close people live to the sea with how they report their health. The trees in it were deliberately not drawn to scale with the distances from the sea, so that the graphic would be visually pleasing and viewers could easily spot where the “land” was.

He then talked us through a study assessing how well people understood a complex report about climate change presented in words, compared with a graphic representing the same data. People absorbed information from the graphic far faster than from the text, but it was not clear whether they had understood as much detail.

Summary and questions

The afternoon concluded with questions and comments from the audience, and it was noted that a balance has to be found between using people who understood the subject matter and people who were good graphic designers, in order to produce visually pleasing graphics that were also informative. However, more and more scientists are seeking to collaborate with designers to help them find interesting and aesthetically pleasing ways of presenting their data.

Friday, 27 July 2012

INTRODUCTION TO INFORMATION SCIENCE


By David Bawden and Lyn Robinson

'I believe this book is the best introduction to information science available at present. It tackles both the philosophical basis and the most important branches, and it is based on solid knowledge about the contemporary literature of the field. If students have the knowledge provided by this introduction, this would be a fine basis on which to go further with specific problems.' - Birger Hjørland, Royal School of Library and Information Science, Copenhagen, Denmark


Monday, 23 July 2012

ICoASL 2013: 3rd International Conference of Asian Special Libraries, April 10-12, 2013

Library and information science professionals are invited to contribute papers for presentation on the conference themes and related topics. Papers should be based on research surveys, case studies, or action plans rather than theoretical explanations.

Main theme is “Special Libraries towards Achieving Dynamic, Strategic, and Responsible Working Environment”.


Tuesday, 10 July 2012

A New OpenCalais Release On the Way

OpenCalais, Thomson Reuters' entity extraction tool, is due for a makeover. A new beta release is expected in early August, and a number of new entities, facts, and events, related primarily to politics and to intra- and international conflict, will be extractable.


Friday, 22 June 2012

OCLC adds Linked Data to WorldCat.org

Commercial developers that rely on Web-based services have been exploring ways to exploit the potential of linked data. The Schema.org initiative—launched in 2011 by Google, Bing and Yahoo! and later joined by Yandex—provides a core vocabulary for markup that helps search engines and other Web crawlers more directly make use of the underlying data that powers many online services.


Friday, 8 June 2012

Review of Welsh/Batley “Practical cataloguing”

Anne Welsh and Sue Batley

ISBN: 978-1-85604-695-4

Monday, 28 May 2012

I think therefore I classify - next ISKO UK event - July 16

The next ISKO UK event (a joint event with the BCS Information Retrieval Specialist Group) will be on Monday July 16th, in London. It is a one-day seminar on the continuing need for classification, exploring how it is taught and how it is changing to meet the needs of an increasingly online world.

The event offers the chance to hear leading speakers talk about the philosophy, teaching, and applications of classification, and how researchers, teachers, and practitioners can adapt to meet the new classification challenges posed by the Semantic Web. Participants will be able to join a number of breakout sessions to explore themes in more detail, as well as investigate automated classification systems in vendor demonstrations. Lunch is included in the cost (only £25 for members and £60 for non-members) and the day will end with a chance to network over wine and nibbles.

For full programme details, speaker biographies, and the booking form, see the main event page.

Wednesday, 11 April 2012

Call for nominations: 2012 UKeiG Tony Kent Strix Award

Thursday, 29 March 2012

Review of On Location event

The first speaker was Mike Sanderson of 1Spatial, who described how geospatial data can help power a European knowledge economy. He spoke of the need for auditing and trust of data sources, particularly ways of mitigating poor-quality or untrustworthy data. At 1Spatial, they do this by comparing as many data sources as they can to establish confidence in the geodata they use. Confidence levels can then be associated with risk, and levels of acceptable risk agreed.

Alex Coley of INSPIRE talked about the UK Location Strategy, which aims to make data more interoperable, to encourage sharing, and to improve the quality of location knowledge. The Strategy is intended to promote re-use of public sector data, and is based on best practice in linking and sharing to support transparency and accessibility. Historically there has been a lot of isolated working in silos, and the aim is to bring all such data together and make it sharable. This should help organisations cut technology support costs and reduce unnecessary duplication of work. Although some organisations need very specialised data, much remains common. Location data is present in all sorts of data sets, and can be re-used and repurposed, for example to help understand environmental issues. Location data can be key to powering interesting mashups: for example, someone could link train timetables with weather information, so that train companies could offer day trips to the resorts most likely to be sunny that day.

The Location Strategy's standardisation of location data is effectively a Linked Data approach, but so far little work has been done to map different location data sets.

Data that is not current is generally less useful than data that is maintained and kept up to date, and data sets that include information about their context and purpose are more useful than those that do not.

Jo Walsh of EDINA showcased their map tools. EDINA is trying to help JISC predict search needs and provide better search services. They are trying to take a Linked Data approach, but there is a need for core common vocabularies. EDINA runs various projects to create tools that help open up data. Unlock is a text mining tool that helps pick out geolocation data from unstructured text. It could be used to add location data as part of digital humanities projects.
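Unlock's internals are not described here, so purely as an illustration, the core idea of gazetteer-based geoparsing (matching place names in free text against a list of known places with coordinates) can be sketched in Python. The three-entry gazetteer, its rounded coordinates, and the `find_places` function are hypothetical; a real geoparser needs a large gazetteer and must disambiguate places that share a name.

```python
import re

# Hypothetical toy gazetteer: place name -> rounded (latitude, longitude).
GAZETTEER = {
    "London": (51.51, -0.13),
    "Edinburgh": (55.95, -3.19),
    "The Hague": (52.08, 4.30),
}

def find_places(text: str) -> list[tuple[str, tuple[float, float]]]:
    """Return (place name, coordinates) for each gazetteer entry that
    appears as a whole word or phrase in text, in order of first appearance.
    No disambiguation is attempted; exact-case matching only."""
    hits = []
    for name, coords in GAZETTEER.items():
        match = re.search(r"\b" + re.escape(name) + r"\b", text)
        if match:
            hits.append((match.start(), name, coords))
    # Sort by position in the text, then drop the position.
    return [(name, coords) for _, name, coords in sorted(hits)]

print(find_places("The project moved from Edinburgh to London."))
```

Each match could then be attached to the source document as structured location metadata, which is the kind of enrichment the digital humanities use case above would need.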

One aspect of Linked Data that is often overlooked is that it can "future proof" information resources. If a project, or department, is closed down, its classification schemes and data sets can become unusable, but if stable URIs have been added to classification schemes there is more chance that people in the future will be able to use them.
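As a minimal sketch of what "adding stable URIs to a classification scheme" can look like, the Python below writes classes of a scheme as SKOS concepts in N-Triples. The base URI `http://example.org/scheme/`, the concept codes, and the labels are hypothetical; `skos:Concept`, `skos:prefLabel`, `skos:inScheme`, and `skos:broader` are standard SKOS terms. In practice the base URI should sit on a domain that will outlive the project that created the scheme.

```python
# Hypothetical base URI; it should be a persistent domain in real use.
BASE = "http://example.org/scheme/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
SKOS = "http://www.w3.org/2004/02/skos/core#"

def concept_triples(code, label, broader=None):
    """Return N-Triples lines describing one SKOS concept with a stable URI.

    Assumes labels contain no quotes or backslashes (no escaping is done)."""
    uri = f"<{BASE}{code}>"
    lines = [
        f"{uri} <{RDF_TYPE}> <{SKOS}Concept> .",
        f'{uri} <{SKOS}prefLabel> "{label}"@en .',
        f"{uri} <{SKOS}inScheme> <{BASE}> .",
    ]
    if broader is not None:
        # Link to the parent class by its URI, not by a local database key.
        lines.append(f"{uri} <{SKOS}broader> <{BASE}{broader}> .")
    return lines

doc = concept_triples("env", "Environment") + concept_triples(
    "env.flood", "Flood risk", broader="env"
)
print("\n".join(doc))
```

Because every class and every hierarchical link is expressed through a URI rather than an internal identifier, the scheme remains interpretable even if the system that originally managed it is switched off.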

Matt Bull of the Health Protection Agency explained how geospatial data is useful for health protection, such as tracking infectious diseases or environmental hazards. In epidemiology, data is inherently social. Diseases are often linked to the environment (radon gas, social deprivation), and clinics, pharmacies, etc. have locations. This can be used to investigate treatment-seeking behaviour as well as patterns of infection, in order to plan resourcing. For example, people seeking treatment for sexually transmitted diseases often do not use their nearest clinic, especially in cities. Such behaviour makes interpretation of the data tricky.

Geospatial data is also useful for emergency and disaster planning and monitoring the effects of climate change on the prevalence of infectious diseases.

Stefan Carlyle of the Environment Agency (EA) talked about the use of geospatial data in incident management. One example was using geospatial information to model the risk of a dam failing and to plan an evacuation of the affected area. This is now a quick and easy operation that would not have been possible in the past without huge effort. Risk assessments of flood defences can now be based on geospatial data, and this can help prioritise asset management, such as the management of flood defences.

Implementing semantic interoperability is a key aim, as is promoting good quality data, and this includes teaching staff to be good "data custodians". Provenance is key to understanding the trustworthiness of data sets.

The EA is focussing on improving semantic interoperability by prioritising key data sets and standardising and linking those, rather than trying to do everything all at once. Transparency is another important aim of the EA's Linked and Open Data strategy and they provide search and browse tools to help people navigate their data sets. Big data and personal data are both becoming increasingly important, with projects to collect "crowd sourced" data providing useful information about local environments.

The EA estimates that its Linked Open Data approach produces about £5 million per year in benefits, from reduced duplication of work and other efficiencies such as unifying regional data silos, and from the sale of data to commercial organisations. The EA believes its location data will be at the heart of making it an exemplar of a pragmatic approach to open data and transparency.

Carsten Ronsdorf from the Ordnance Survey described various location data standards, how they interact, and how they are used. BS7666 specifies that data quality should be included, so the accuracy of geospatial data is declared. Two key concepts for the OS are the Basic Land and Property Unit and the Unique Property Reference Number. Address data is heavily standardised to provide integration and facilitate practical use.

Nick Turner, also of the OS, then talked about the National Land and Property Gazetteer. The government instructed the OS to take over UK address data management because a number of public sector organisations were trying to maintain separate address databases. The OS formed a consortium with them, which produced AddressBase.

AddressBase has three levels: AddressBase, which holds basic postal addresses; AddressBase Plus, which adds the addresses of buildings such as churches, temples, and mosques, along with other data; and AddressBase Premium, which also includes details of buildings that no longer exist and buildings that are planned.

AddressBase is widely used because organisations can refer to it to verify and update their address records, or to extract other address information when they need it, rather than having to manage it all themselves.

Tuesday, 28 February 2012

ISKO UK event: On Location: organizing and using geospatial information

Wilkes Room - British Computer Society London Office

In this ISKO UK and BCS joint meeting, we will hear from experts about the current geospatial information landscape and its challenges, some of the standards and frameworks that have been put into place to ensure interoperability and the potential for linking data. We will also hear how some users of GIS systems have applied them in their own organizations.

The event is free to ISKO and BCS members and to full-time students. The fee for non-members is just £40, payable in advance. Registration opens at 1.45, immediately following the ISKO UK AGM, and we shall start promptly at 2 p.m. The programme will be followed by a chance to network, with wine and nibbles.

For full details and booking go to: http://www.iskouk.org/events/location_march2012.htm